Online Hate Speech with Offline Impact: How the Internet Is Exacerbating the Problem of Hate


The internet has brought advances in how we consume information, connect with one another, and express ourselves. It has democratised access to knowledge, improved communication, and created virtual communities and e-commerce opportunities. It promised to connect the world, and to a large extent it has, but it has also driven people apart.

The internet connects people by enabling them to form groups, drawing on the human need for a sense of belonging. As human beings, we need to be part of a group, and we prefer to surround ourselves with people who think like us; this is a major driver of how we interact with others. A group can be united by almost anything, and that unity brings many appealing features: a sense of belonging, understanding, and a feeling of acceptance and support.

Although this unity can be highly beneficial for individuals looking for like-minded people, some of these online channels are actively used by people and entities with malicious intent, who use online communication to manipulate others. Increasingly, this online manipulation has been used to extend the spread and reach of hate speech. The rise of online hate speech is a growing concern and is causing a tremendous amount of harm.

What is hate speech?

Despite extensive discussion and research on hate speech, there is still no universally agreed-upon definition of the term. Tilt defines hate speech as bias-motivated, hostile, and malicious language targeted at a person or group because of their actual or perceived innate characteristics, such as race, colour, ethnicity, gender, sexual orientation, nationality, religion, or political affiliation. It can take many forms, including name-calling, slurs, and other verbal or written abuse, as well as nonverbal expression such as hate symbols and images. Hate speech can also include calls to action, mostly of a violent nature. Although face-to-face interactions are not free from hate speech, it is much more prevalent online, largely because of the anonymity that online communication affords.

Online hate

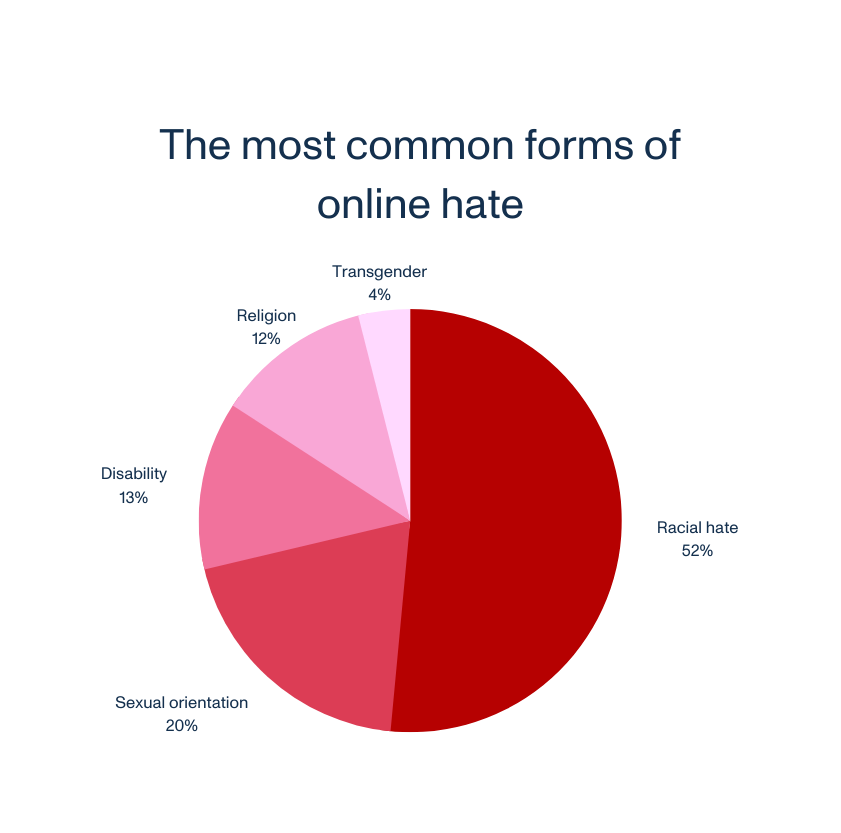

There are various types of hateful speech on the internet. According to recent research, race is the most common motivation for online hate crime, accounting for 52% of all online hate crimes. It is followed by sexual orientation (20%), disability (13%), religion (12%), and transgender identity (4%).

Racism, the most common form of hate speech, has serious implications in the offline world: racist comments are used to fuel hate crimes such as physical violence, harassment, and discrimination. Cyberbullying is another form of online hate speech, characterised by the use of derogatory language. Both racism and cyberbullying affect victims’ happiness, economic prospects, trust, and self-esteem. Online hate speech therefore harms victims’ wellbeing well beyond the screen, and it is important to remain vigilant about these consequences.

Moderating online hate

Many countries have laws concerning hate speech, but in many contexts these laws are difficult to enforce, particularly online. Online platforms do moderate hate speech, yet there is currently no reliable way to detect it across platforms other than highly labour-intensive manual review of platform content. There are some efforts to automate the detection of hate speech, but they cannot yet classify it reliably. Moreover, not all platforms moderate user-generated content. Twitter, for example, scaled back its content moderation after Elon Musk acquired the platform in 2022. This has had major negative consequences, most notably an increase in hateful content.

The offline impact of online hate speech

Offline hate crimes are often preceded by online hate speech. Online extremist narratives have been linked to real-world hate crimes against both individuals and larger communities. For example, in 2019 an extreme-right terrorist, Brenton Tarrant, attacked two mosques in Christchurch, New Zealand, killing 51 people in 36 minutes.

What set Tarrant’s attack apart is that he announced it on 8chan 15 minutes beforehand and livestreamed the atrocity on Facebook. In doing so, he exposed how poorly such platforms were able to moderate content. Although Facebook removed the video within an hour, it was still widely shared: it was re-uploaded more than 2 million times across different social media platforms and remained easily accessible for another 24 hours after the attack.

Reducing online hate

The example above shows the relationship between online hate speech and offline hate crimes: what started as online hate speech resulted in the deadliest terrorist attack in New Zealand’s history. To combat this, more attention should be paid to detecting hate speech, for the sake of its online (and potential offline) victims. We need a rapid and continuous approach to combating hate speech.

Monitoring online hate

Tilt’s hate speech detection models offer a valuable service to those trying to monitor and analyse online hate speech, toxicity, and violence across various platforms (e.g. Twitter, Reddit, YouTube, Instagram). Rather than assigning a simple binary label (hateful or not), our models rank messages by level of severity. Content is categorised and ranked according to the definition of hate speech given above. This classification enables users to quickly detect, rank, and report hate speech.
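To illustrate what ranking by severity (as opposed to a yes/no label) can look like in practice, here is a minimal sketch. It is not Tilt’s model: the severity levels, the score thresholds, and the names `Severity`, `severity_of`, and `rank_by_severity` are hypothetical, and each message’s score is assumed to come from some upstream classifier rather than being computed here.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Hypothetical severity levels, ordered from least to most severe."""
    NONE = 0
    OFFENSIVE = 1
    HATEFUL = 2
    VIOLENT = 3


# Hypothetical lower bounds on a 0-1 score, checked from most to least severe.
THRESHOLDS = [
    (0.9, Severity.VIOLENT),
    (0.7, Severity.HATEFUL),
    (0.4, Severity.OFFENSIVE),
]


@dataclass
class Message:
    text: str
    score: float  # assumed output of an upstream classifier, in [0, 1]


def severity_of(score: float) -> Severity:
    """Map a continuous score to a graded level instead of a binary label."""
    for lower_bound, level in THRESHOLDS:
        if score >= lower_bound:
            return level
    return Severity.NONE


def rank_by_severity(messages: list[Message]) -> list[tuple[Severity, Message]]:
    """Pair each message with its level; most severe level first, then highest score."""
    labelled = [(severity_of(m.score), m) for m in messages]
    return sorted(labelled, key=lambda pair: (pair[0], pair[1].score), reverse=True)


if __name__ == "__main__":
    inbox = [
        Message("placeholder A", 0.15),
        Message("placeholder B", 0.95),
        Message("placeholder C", 0.72),
        Message("placeholder D", 0.45),
    ]
    for level, msg in rank_by_severity(inbox):
        print(f"{level.name:<9} {msg.score:.2f} {msg.text}")
```

Sorting on the level first and the raw score second keeps messages within the same level in descending score order, so a reviewer working from the top of the list sees the most urgent items first.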

Tilt helps organisations detect hate speech quickly and with minimal effort, saving time on investigations and broadening the scope of their research. Organisations can then efficiently report hateful content and demand that platforms remove it. Detecting hate speech thus strengthens organisations’ influence and ultimately reduces online hate, as well as its offline impact.
